This blog post is based on the paper "The Role of Institutional and Self in the Formation of Trust in Artificial Intelligence Technologies", published in the journal Internet Research (Emerald; ABDC: ranked A; Chartered Association of Business Schools' Academic Journal Guide: Level 3; SSCI).

According to the Southeast Asia Digital Contact Tracing report that Digital Reach submitted to ASEAN, the adoption of AI technology to manage COVID-19 in Southeast Asian nations (including Singapore, Indonesia, Thailand, Vietnam, Malaysia, and the Philippines) raised significant concerns. These concerns centered on technical vulnerabilities, a lack of transparency, and inadequate policy enforcement. For instance, Malaysia introduced multiple applications, some of which were discontinued without proper government endorsement, raising doubts about their reliability. In Singapore, the data security information for the TraceTogether app was not kept up to date, casting doubt on the app's ability to safeguard user privacy.

Many AI-powered mobile apps emerged following World Health Organization (WHO) guidelines that called for data-driven, transparent, and privacy-centric surveillance systems. These apps tracked vaccination statuses and vaccination centers, and they appeared alongside a wave of digital entrepreneurial initiatives. As restrictions lifted, the UNWTO's call for responsible tourism prompted the adoption of AI-powered tools such as contact tracing apps and facial recognition technology to ensure safety.

Within organizations, successful technology adoption depends on employee acceptance, a step that is crucial to business success. Consumer adoption plays a similarly pivotal role in the overall acceptance of AI technology. Yet even under mandates, barriers to consumer adoption persist, some of them inherent to AI applications themselves. During crises, scaling up innovation adoption becomes essential, which means closing the gap between implementation and acceptance. Mandated adoption narrows this gap but also triggers psychological reactance among users. This reactance is the focus of the study, which examines it in the context of technology adoption in the travel and tourism sector.

| Can you trust AI? |
|---|

Trust, a crucial element in human-technology relationships, can be swiftly eroded when governments handle technology poorly. To understand this complex landscape, research needs to identify the factors that foster trust in the use of AI technology, including individual openness, personal beliefs, and the presence of robust institutional frameworks. Designing AI technology with a user-centric approach requires understanding the facilitating conditions and conversion factors that encourage uptake. Industries, in turn, need to pivot towards human-centered and responsible implementation for the sake of societal well-being.

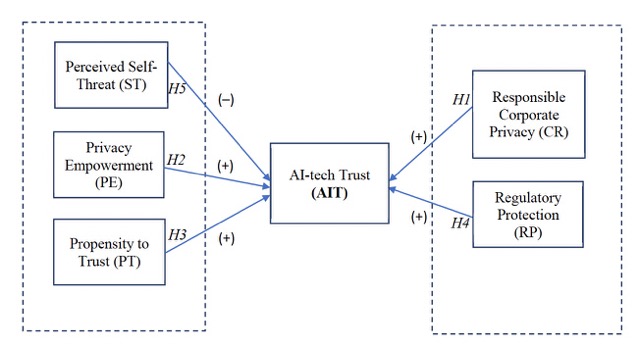

| Conceptual Model |
|---|
| Factors affecting trust in mandated adoption of AI-based tech (Wong et al., 2023) |

In the study, perceived self-threat (ST) reduced trust, whereas propensity to trust (PT) and regulatory protection (RP) increased it.

On self-threat, the study argued that perceiving AI-tech as a threat to the self induces anxiety, which in turn undermines trust in the technology, as evidenced by the slow adoption of mandated apps. PT, a dispositional trait, shapes trust: naturally trusting users readily trust AI-tech or are inclined to seek more information about it. Regulatory protection also fosters trust: robust regulations reassure users, strengthening trust and forestalling negative perceptions.

Government mandates requiring travelers to use these apps clashed with users' ideals of freedom of choice. Such mandates provoked resistant behavior, as users felt a loss of control: they could neither reject nor manipulate the information involved when using AI technology. The use of AI-tech presents ethical, legal, and governance challenges. Mass adoption depends on generating vast amounts of private data and on data-driven decision-making, which leaves users with limited control and breeds hesitancy rooted in knowledge gaps, fears of data mishandling, and mistrust of government. The "right to be forgotten" also varies by location; in some regions explicit consent is not required, which raises concerns about data privacy. Regulations such as the GDPR aim to establish trust and govern the use of AI-tech, underscoring the need for ethical, transparent, and accountable practices consistent with user expectations and societal norms.

Interestingly, the study found that users seemed willing to trade off their privacy concerns for the freedom to travel, making rational choices about information disclosure. This trade-off was especially notable where generic scanners or logbooks were used during the early stages of the pandemic: these allowed for potential data fabrication and, in a way, restored users' sense of freedom. The hypothesis concerning privacy empowerment (PE) was not supported.

| Trade-off between privacy and freedom to travel |
|---|
| "Travel users would have made a rational choice in information disclosure despite having privacy concerns." (Wong et al., 2023) |

Furthermore, the study suggested that efforts to persuade users (CR) and to help them regain control through technology may have backfired, breeding skepticism. The rapid repurposing of technological solutions during COVID-19, together with security fatigue, privacy helplessness, and privacy fatigue, further complicated user acceptance. In Germany, for instance, despite sincere attempts to build trust through transparency, citizens remained reluctant to use tracking apps, reflecting a broader disillusionment with security and privacy measures.

Implications

Balancing institutional directives with tourists' own assessments of trust is pivotal to revitalizing the tourism industry. The success of AI-tech in this context depends on the synergy between users and the technology, which in turn rests on decisions about data sources, algorithms, training, and deployment. Overcoming initial resistance to AI technology is therefore essential to managing reactance effectively.

Crucially, businesses can foster passive acceptance of AI-tech by aligning technological engagement with user expectations and preferences. By sharing essential information in advance, companies can present AI-tech not as a cumbersome imposition but as a voluntary, value-adding step that enhances personal freedom.

| Trust Formation |
|---|
| Transparent communication plays a central role in fostering passive acceptance of AI-tech by aligning technological engagement with user expectations and preferences. |

Moreover, tackling resistance necessitates tailored strategies. Educating tourists about security measures and potential data risks is vital. Implementing robust support systems that respond promptly to users’ concerns not only instills confidence but also nurtures trust over time.

Prioritizing clarity around security and privacy issues further amplifies user trust. Collaborating actively with regulatory bodies, businesses can demonstrate their commitment to safeguarding user welfare. This partnership ensures a cohesive approach, enhancing the overall sense of security for users.

Ultimately, trust formation in the context of AI-tech usage involves an interplay of numerous factors. When customer expectations align with the perceived performance of AI-tech safeguards, trust naturally develops. By acknowledging and addressing these elements, businesses can effectively cultivate trust, paving the way for a successful integration of AI technology in the tourism sector.

Reference

Wong, L.-W., Tan, G.W.-H., Ooi, K.-B. and Dwivedi, Y. (2023), "The role of institutional and self in the formation of trust in artificial intelligence technologies", Internet Research, Vol. ahead-of-print No. ahead-of-print. https://doi.org/10.1108/INTR-07-2021-0446